The Journey Matters: Average Parameter Count over Pre-training Unifies Sparse and Dense Scaling Laws

We study LLM sparse pre-training with gradual pruning and find that the average parameter count during pre-training, rather than the final model size, predicts model quality for both sparse and dense pre-training.

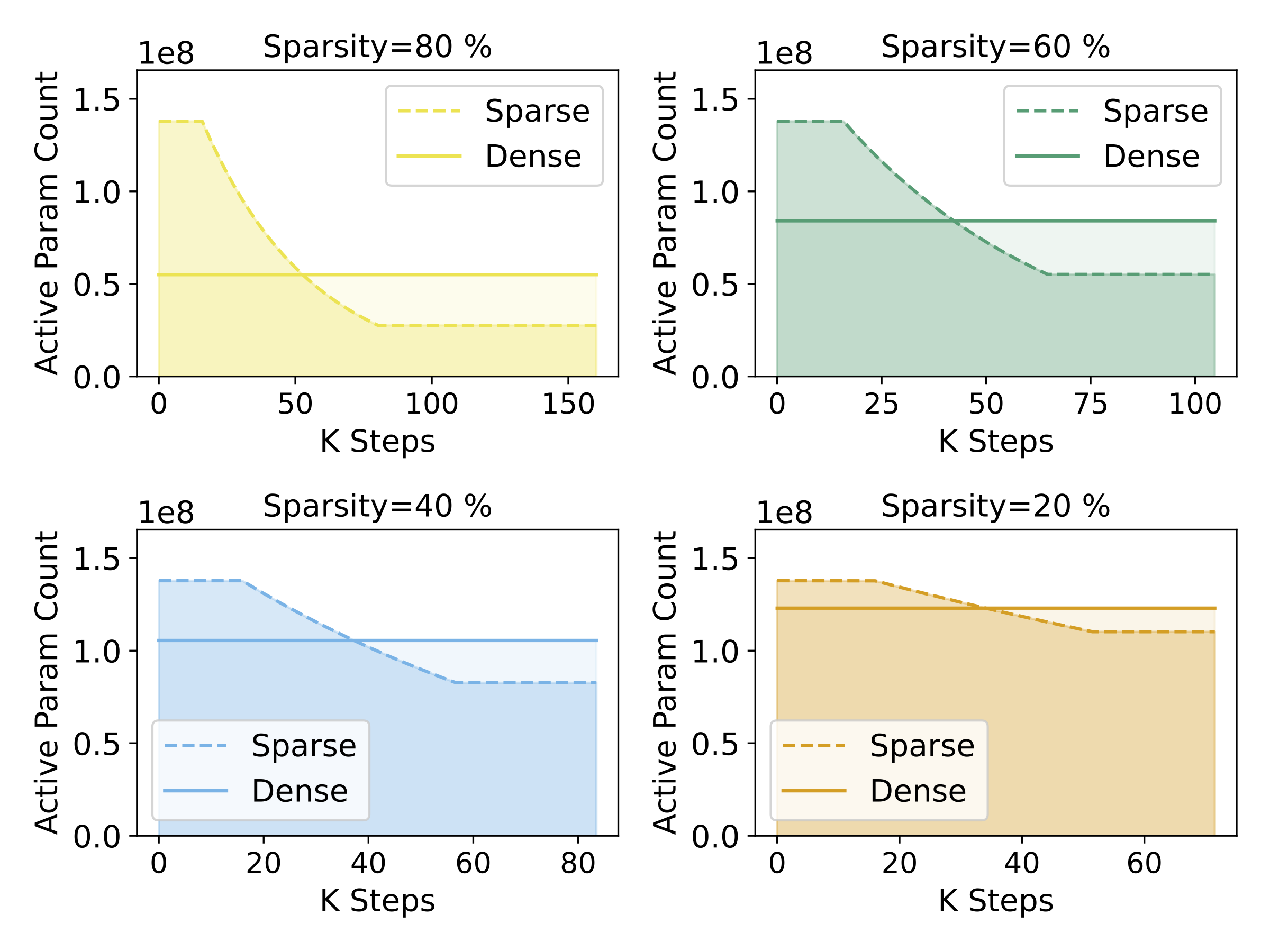

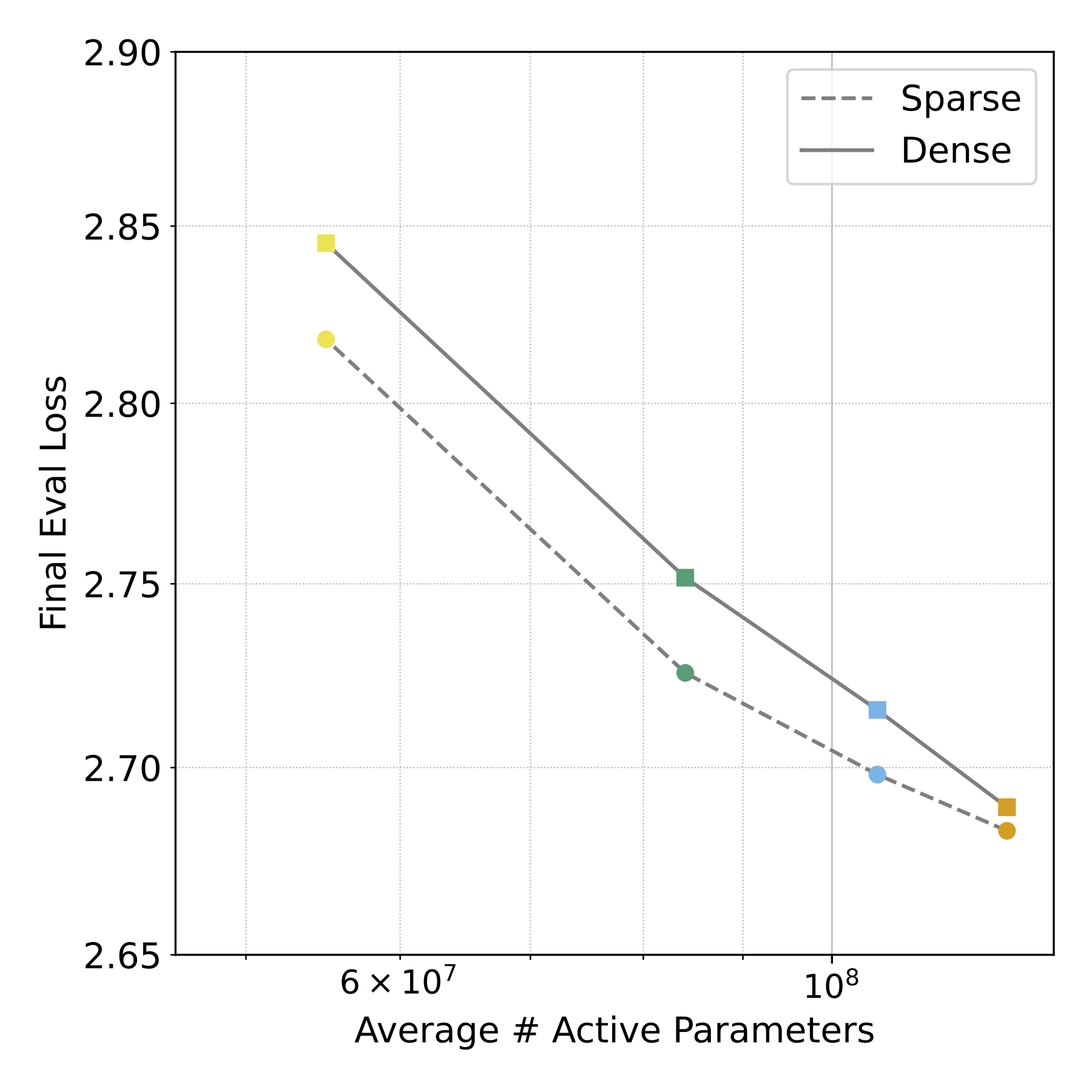

The two figures above compare 4 pairs of sparse and dense models with matching average parameter counts. The first figure shows how parameter count changes over training time for sparse vs. dense pre-training. The second shows that sparse pre-trained models, despite having significantly fewer final parameters, can match the performance of their dense counterparts when both share the same average parameter count during training.

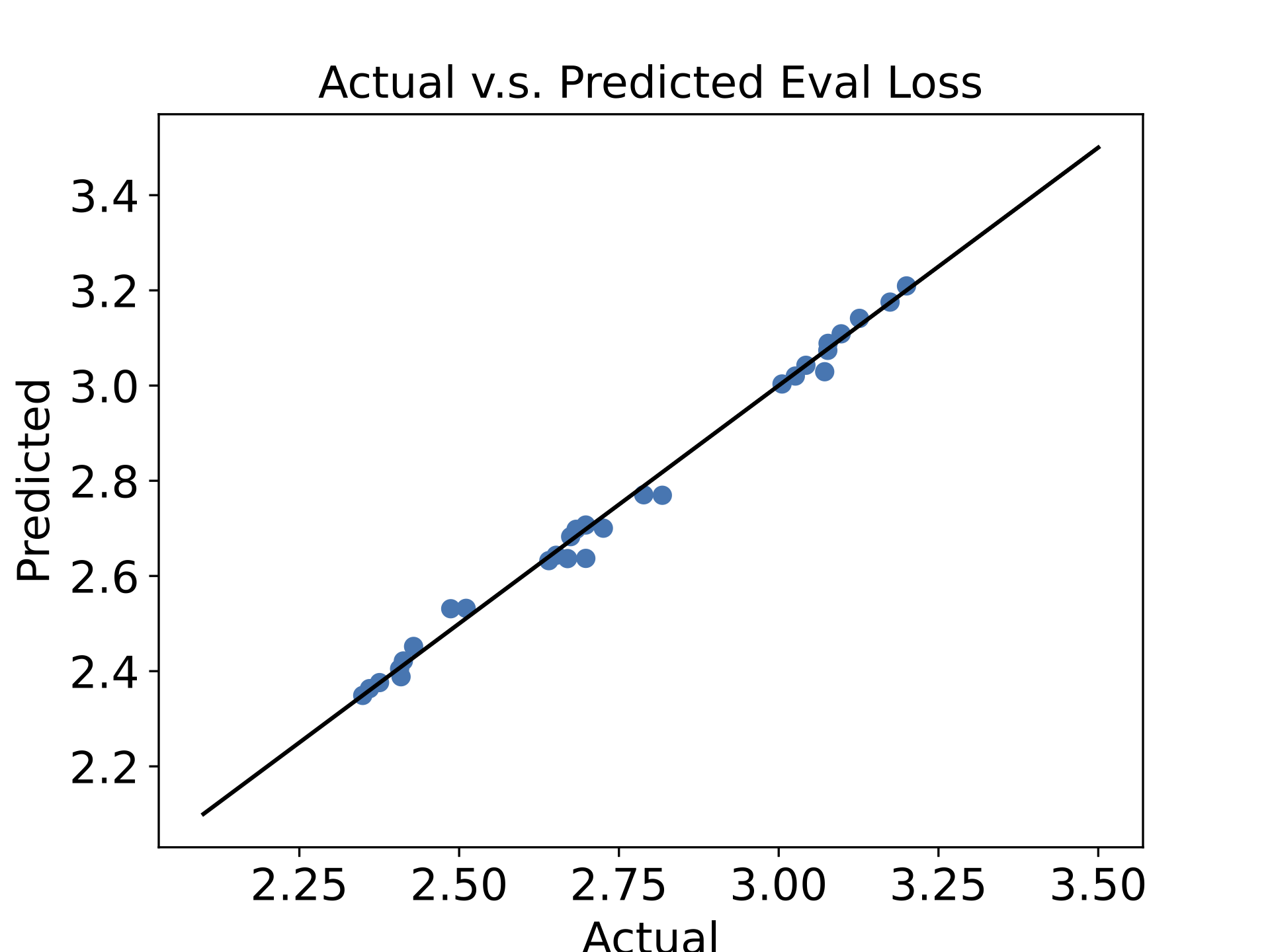

Building on this observation, we propose a modified scaling law that uses the average parameter count over pre-training as the model size term. This approach unifies the scaling laws of both sparse and dense pre-training paradigms. We validated this scaling law across models ranging from 58M to 468M parameters, trained with up to 20x the Chinchilla-optimal token budget. The modified scaling law accurately predicts model performance across both sparse and dense pre-training regimes.

The original Chinchilla scaling law, where \(N\) is the model's parameter count and \(D\) is the number of training tokens:

\[ L(N, D) = E + \frac{A}{N^\alpha} + \frac{B}{D^\beta} \]

Our modified scaling law using average parameter count:

\[ L(N_{\text{avg}}, D) = E + \frac{A}{N_{\text{avg}}^\alpha} + \frac{B}{D^\beta} \]

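where \(N_{\text{avg}} = \frac{1}{D} \int_0^D N(t)\,dt\) is the parameter count averaged over training, and \(N(t)\) is the parameter count after \(t\) training tokens. For dense pre-training \(N(t)\) is constant, so \(N_{\text{avg}} = N\) and the original law is recovered.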
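As a rough illustration of the definition of \(N_{\text{avg}}\), the sketch below approximates the integral by averaging the parameter count over training steps. The pruning schedule is an assumption made for this example (a cubic gradual-pruning ramp between two hypothetical fractions of training, `start_frac` and `end_frac`); it is not necessarily the schedule used in this work. The function names and the 1B starting size are likewise illustrative.

```python
import numpy as np


def sparsity_at(step, total_steps, final_sparsity, start_frac=0.25, end_frac=0.75):
    """Sparsity at a training step under an ASSUMED cubic gradual-pruning schedule.

    The model stays dense until start_frac of training, ramps up to
    final_sparsity by end_frac, and keeps that sparsity afterwards.
    """
    start = start_frac * total_steps
    end = end_frac * total_steps
    progress = np.clip((step - start) / (end - start), 0.0, 1.0)
    return final_sparsity * (1.0 - (1.0 - progress) ** 3)


def average_param_count(n_dense, total_steps, final_sparsity, **schedule_kwargs):
    """Discrete approximation of N_avg = (1/D) * integral_0^D N(t) dt.

    Assumes every step consumes the same number of tokens, so averaging
    over steps is equivalent to averaging over tokens.
    """
    steps = np.arange(total_steps)
    sparsity = sparsity_at(steps, total_steps, final_sparsity, **schedule_kwargs)
    n_t = n_dense * (1.0 - sparsity)  # parameter count remaining at each step
    return float(n_t.mean())


if __name__ == "__main__":
    n_dense = 1_000_000_000  # hypothetical starting (dense) size
    for s in (0.2, 0.4, 0.6, 0.8):
        n_avg = average_param_count(n_dense, total_steps=10_000, final_sparsity=s)
        n_final = n_dense * (1.0 - s)
        print(f"sparsity={s:.0%}  final={n_final:.3g}  average={n_avg:.3g}")
```

Under any schedule of this shape, the average lies between the final and the starting parameter count; how close it sits to either end depends on when pruning starts and ends.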

The figure above shows the goodness of fit of our unified scaling law. Replacing the final parameter count with the average parameter count lets a single fit predict model performance across both sparse and dense pre-training regimes, which provides a theoretical foundation for understanding the efficiency of sparse pre-training.

Sparse pre-training effectively decouples average parameter count (which governs quality) from final parameter count (which determines inference costs), thus enabling a new Pareto frontier in the training cost vs. inference efficiency trade-off.
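The decoupling can be made concrete with a small sketch of the modified scaling law. The default coefficients below are the published Chinchilla fit, used purely as placeholders; they are not the coefficients fitted in this work. The token budget and model sizes are hypothetical numbers chosen for illustration.

```python
def predicted_loss(n_avg, tokens, E=1.69, A=406.4, B=410.7, alpha=0.34, beta=0.28):
    """L(N_avg, D) = E + A / N_avg**alpha + B / D**beta.

    Defaults are the published Chinchilla coefficients, used only as
    placeholders; they are NOT the coefficients fitted in this work.
    """
    return E + A / n_avg**alpha + B / tokens**beta


# Hypothetical numbers for illustration only.
tokens = 2.0e10   # training tokens D
n_avg = 6.0e8     # average size of a sparse run, equal to the size of its matched dense run
n_final = 2.0e8   # final (inference-time) size of the sparse run

# Under the modified law, the sparse run and its matched dense run share this prediction.
loss_matched = predicted_loss(n_avg, tokens)

# A dense run trained at the sparse model's final size is predicted to do worse.
loss_final_sized = predicted_loss(n_final, tokens)

print(f"matched average size: {loss_matched:.4f}")
print(f"dense at final size:  {loss_final_sized:.4f}")
```

In this reading, the sparse model is served at `n_final` parameters while its predicted quality is that of a dense model with `n_avg` parameters, which is the trade-off the paragraph above describes.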

We're releasing 4 pairs of sparse/dense LLMs (~1B parameters) with matching average parameter counts and identical training tokens, at sparsity levels from 20% to 80%.

| Sparsity | Sparse Model | Dense Model |
|---|---|---|
| 20% | sparsellm-1b-20p | sparsellm-1b-20p-small-dense |
| 40% | sparsellm-1b-40p | sparsellm-1b-40p-small-dense |
| 60% | sparsellm-1b-60p | sparsellm-1b-60p-small-dense |
| 80% | sparsellm-1b-80p | sparsellm-1b-80p-small-dense |

All models are hosted on Hugging Face and can be loaded using the Transformers library.
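A minimal loading sketch follows. It assumes the checkpoints are causal language models compatible with `AutoModelForCausalLM` and `AutoTokenizer`, which is not stated here. The Hugging Face namespace is not given in this document, so it is left as a parameter; the helper names `repo_id` and `load_pair_member` are hypothetical.

```python
SPARSITY_LEVELS = (20, 40, 60, 80)  # the released sparsity levels, in percent


def repo_id(namespace, sparsity_pct, dense_baseline=False):
    """Build a Hub repository ID from the model names in the table above.

    `namespace` is the Hugging Face user or organization hosting the models;
    it is NOT specified in this document and must be supplied by the caller.
    """
    if sparsity_pct not in SPARSITY_LEVELS:
        raise ValueError(f"sparsity_pct must be one of {SPARSITY_LEVELS}")
    name = f"sparsellm-1b-{sparsity_pct}p"
    if dense_baseline:
        name += "-small-dense"
    return f"{namespace}/{name}"


def load_pair_member(namespace, sparsity_pct, dense_baseline=False):
    """Load tokenizer and model (assumed to be a causal LM) with Transformers."""
    # Imported lazily so that repo_id() can be used without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    rid = repo_id(namespace, sparsity_pct, dense_baseline)
    tokenizer = AutoTokenizer.from_pretrained(rid)
    model = AutoModelForCausalLM.from_pretrained(rid)
    return tokenizer, model
```

For example, `load_pair_member(namespace, 60)` would fetch the 60% sparse model and `load_pair_member(namespace, 60, dense_baseline=True)` its matched dense counterpart, once `namespace` is set to the actual hosting account.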

We are deeply grateful to Elias Frantar, Naveen Kumar, Sanjiv Kumar, Daniel M. Roy, and Clemens Schaefer for their valuable feedback and thoughtful review of this paper. We also acknowledge the critical support provided by the Google CoreML Performance Team and Google Research during this project. We further recognize the extended team at Google DeepMind, who enabled and supported this research direction. This work was supported in part by the Sloan Foundation, the MIT-IBM Watson AI Lab, Apple, and SRC JUMP 2.0 (CoCoSys).